System Monitoring

Track your system performance across setups. Monitor CPU, GPU, and memory usage in real time and get generic recommendations.

Start Monitoring

Model Benchmarking

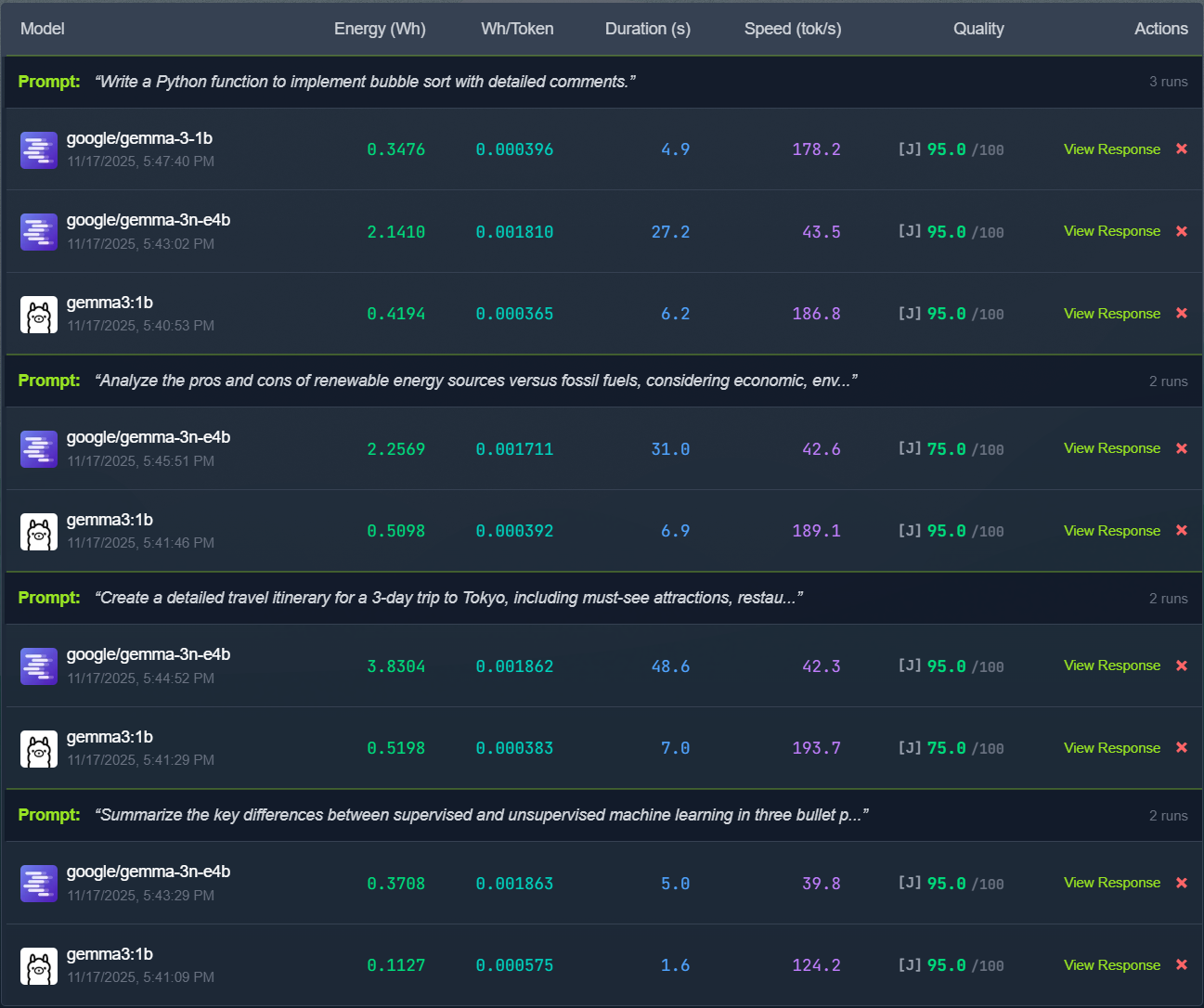

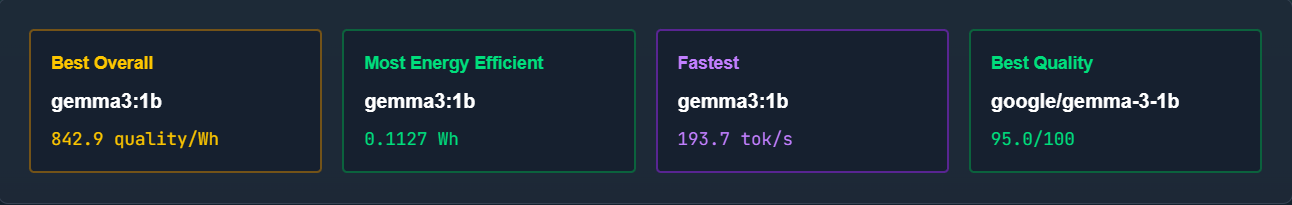

Benchmark local LLMs and compare performance. Test inference speed, resource usage, and energy efficiency across different models and prompts.

Benchmark Models